Shaping the Tools of AI

A Research Essay Investigating the Impacts of AI

2023

Introduction

Artificial intelligence has occupied the human imagination since the 1950s, when Alan Turing explored the possibility that machines could think (Anyoha, 2020). Recent developments such as large language models (LLMs) like ChatGPT and LaMDA, as well as other generative AI tools like OpenArt, raise questions about the impacts of these technologies. LLMs are trained on vast quantities of text “fed” to them. Drawing on this training, they can generate writing in a wide variety of genres when prompted and can adapt their responses to a user's instructions. The ethics of how AI “learns” are hotly debated, as are the consequences of using this technology as a replacement for human authorship.

AI can benefit many sectors, including education and healthcare, by generating content tailored to the consumer without requiring a great deal of work on the producer's part. AI also has tremendous potential in programming because of its ability to write code (De Angelis et al., 2023). For writers and researchers, it can suggest research questions, produce drafts, and aid in grammar choices. AI is already being used to write essays, summaries, reports, and computer code, and Van Dis et al. suggest that it may eventually be used to design scientific experiments, conduct peer review, write manuscripts, and make editorial decisions to accept or reject manuscripts (Van Dis et al., 2023). In their editorial “ChatGPT: Five Priorities for Research,” Van Dis et al. argue that these developments could “make science more equitable and increase the diversity of scientific perspectives” (Van Dis et al., 2023).

Figure 1 Credit: Microsoft News Center

Even so, the main concerns about AI center on its impacts on education, research, and the arts. Art and language are among the most fundamental aspects of being human. Humans make art in the most challenging times of our lives to cope, process, and endure. As a species, we developed art and language by tapping into higher cognitive functions (Morriss-Kay, 2010). These are evolutionary traits we need to continue to use.

The United States has seen a decline both in literacy and in the number of college students enrolling in the humanities, including art and English (Heller, 2023). The decline in literacy predates COVID-19 and reflects a much larger problem within the education system that needs to be addressed (Carr, 2023). Exacerbating it by introducing a technology designed to circumvent the smaller tasks of writing, without policies in place, is unwise. One must stop to consider the consequences of removing so much humanity and genuine human connection from our lives. We risk trading away our literacy skills for the next great technology. Whatever one's stance on AI, there is no pulling the plug on this technology, so I propose examining what can be done to protect writers, artists, and the humanities from being replaced by AI.

Educational Applications

The educational sector can benefit from AI-generated content if it takes the needs of educators and students into account. Although education may seem to be the area most threatened by AI, AI can be used as a tool to provide personalized learning experiences for students (Gill et al., 2023). When considering AI-generated content for a lesson plan, it is important to ensure that it benefits the development of both teacher and student. This should be done through educator training and targeted implementation in the classroom. With proper teacher training and lesson plans structured around teaching this new literacy, AI can serve as a tool rather than a replacement for thought (Gill et al., 2023).

Professor Tara Brabazon, Dean of Graduate Research and Professor of Cultural Studies at Charles Darwin University, has an excellent strategy for teaching information literacy as a way to combat plagiarism (Brabazon, 2015). I believe that much can be learned from Brabazon's argument, because the struggle to combat plagiarism in academic writing mirrors the struggle to deter the use of ChatGPT in academic writing. At their core, both issues involve institutions relying on software to counter students' pursuit of the easiest, least-effort route to perceived success.

In “Turnitin? Turnitoff: The Deskilling of Information Literacy,” Brabazon argues that the solution lies not in using software to police academic integrity but in implementing information literacy practices. She writes, “A key strategy that costs nothing except academic staff time and their professional development is the creation of an information scaffold in every subject, in every semester in a degree” (Brabazon, 2015, p. 15). A targeted approach to teaching students information literacy is the first step toward academic integrity. The same principle should be applied to AI in the classroom, and it should be introduced well before the college level. If students are taught how to use AI responsibly, along with the issues its use raises, then AI can act as a tool and not a crutch for the academically lazy.

One benefit of having an AI program draft the structure of an essay or research document is that it leaves the author more time to reflect on the subject. If much of an essay's structure, such as syntax and grammar choices, were handled in advance, writers could devote more effort to developing a personal voice. There could be a greater emphasis on thinking when this technology is built into education (Gill et al., 2023). This could place a higher value on media and literature created with a genuine human voice.

Marshall McLuhan, the Canadian philosopher and media savant, claimed that each new technology turns its predecessor into an art form (McLuhan, 2015, p. 13). The use of AI to generate written content may therefore make genuine human writing a premium commodity of greater value. There could be a greater emphasis on the thoughts, opinions, and personality of the writer. The dryness of academic papers could give way to genuine thoughts, anecdotes, and metaphors, since these are the features of writing that LLMs struggle to replicate convincingly. A rubric that values genuine thought over basic essay structure would give writers room for deeper thinking while also helping to ensure that a paper was not written by a machine.

However, this raises the concern that once essay structure ceases to be taught as part of the language arts curriculum, essays will become a click-and-edit activity without any educator intervention. The word essay derives from the French essayer, “to try”; an essay is meant to challenge students to try to apply their knowledge, and AI would render that definition null if it were overused in the classroom without proper policy in place. In their article “Impact of artificial intelligence on human loss in decision making, laziness and safety in education,” Ahmad et al. raise a crucial point about this technology's effect on the brain, stating “When the usage and dependency of AI are increased, this will automatically limit the human brain’s thinking capacity. This, as a result, rapidly decreases the thinking capacity of humans. This removes intelligence capacities from humans and makes them more artificial. In addition, so much interaction with technology has pushed us to think like algorithms without understanding” (Ahmad et al., 2023, p. 4). We would essentially be innovating ourselves out of literacy. Educators have a major role in shaping how these tools are used through the literacies they teach their students.

Plagiarism and Copyright

A major concern with large language models and other generative AI software is plagiarism. Because these models generate output from the content they were trained on, the original artists and writers, and the companies that support them, have little to no recourse. Getty Images began legal proceedings against Stability AI in early 2023, alleging infringement of its intellectual property (Getty Images, 2023). In a statement released on January 17, 2023, Getty said that “Stability AI unlawfully copied and processed millions of images protected by copyright and the associated metadata owned or represented by Getty Images absent a license to benefit Stability AI’s commercial interests and to the detriment of the content creators” (Getty Images, 2023). This was not the only legal challenge to generative AI: artists Sarah Andersen, Kelly McKernan, and Karla Ortiz also sued over copyright infringement, but that lawsuit has been mired in difficulties and technicalities. In October 2023, a judge dismissed most of the claims against Stability AI, Midjourney, and DeviantArt, finding that the plaintiffs had not adequately shown that copyright infringement had occurred (Andersen v. Stability AI, 2023).

Artists who push back are often criticized as being resistant to technology. Yet little consideration is given to the fact that copyright law places far fewer constraints on machines than on people: it allows generative AI to copy an artist's style in the name of progress, threatening the livelihood of a person who needs to eat and earn a living, and fair use doctrine may leave that artist with little recourse. A further difficulty with lawsuits claiming that AI uses human artists' work without consent is that the burden of proof falls on the artist. Artists must have registered copyrights in their work and must also prove that it was directly used, as Andersen v. Stability AI illustrates.

Additionally, there is debate over whether content produced by generative AI can or should be copyrighted. Current copyright laws, such as the Copyright Act of 1976, seem outdated in the face of this rapidly evolving technology. However, the Compendium of U.S. Copyright Office Practices, updated by the United States Copyright Office in 2021, makes clear that the Office requires human authorship for a work to be eligible for copyright:

“To qualify as a work of ‘authorship’ a work must be created by a human being. See Burrow-Giles Lithographic Co., 111 U.S. at 58. Works that do not satisfy this requirement are not copyrightable. … Similarly, the Office will not register works produced by a machine or mere mechanical process that operates randomly or automatically without any creative input or intervention from a human author. The crucial question is ‘whether the “work” is basically one of human authorship, with the computer [or other device] merely being an assisting instrument, or whether the traditional elements of authorship in the work (literary, artistic, or musical expression or elements of selection, arrangement, etc.) were actually conceived and executed not by man but by a machine.’” (U.S. Copyright Office, 2021, ch. 300, p. 22)

The U.S. Copyright Office (USCO) also published guidance in the Federal Register in March 2023 describing its rejection of a registration request, submitted in 2018, for a work generated entirely by AI. The request was rejected on the grounds that the work held no “creative contribution from a human actor.” The same guidance describes a February 2023 decision concerning a graphic novel whose text was written by a human but whose images were generated by AI. In that case, the USCO ruled that the writing could be protected by copyright but the AI-generated artwork could not (USCO, 2023).

If a script cannot be copyrighted, then a show built on it may not be protected by copyright either. Likewise, using generative AI video to capture and reuse an actor's likeness could leave the resulting media without copyright protection. This may motivate large media companies to keep employing writers and artists, if only to retain exclusive rights to their content. It also gives hope to artists and writers who fear being replaced, as does the agreement that ended the 2023 WGA strike. Under that agreement, AI cannot write or rewrite literary material, writers may choose whether to use AI technology, and the company must disclose whether any material provided to the writer was generated by AI; the WGA also reserves the right to “assert that exploitation of writers’ material to train AI is prohibited by MBA or other law” (WGA, n.d.).

Van Dis et al. also call for a review of the legal question of who owns the rights to AI-generated content, since there are several possible claimants (Van Dis et al., 2023). Is it the creator of the AI technology? The person who wrote the prompt that generated the text? Or the person who wrote the original content used to train the AI? The legal definition of “authorship” will certainly need to be reevaluated for the era of AI.

It is imperative that copyright law continue to support the human aspect of art, literature, and media. Further laws are also needed to ensure that corporations do not forsake individuals for profit, as is frequently the case. Such laws should protect creators and require transparency from publishers and writers who use AI to produce their work. Van Dis et al. propose that the research community and publishers work out how to use generative AI with “integrity, transparency and honesty” (Van Dis et al., 2023). This is easier said than done, however, since much of corporate America is not set up for such transparency. Without laws that clearly define the limits of copyright and require transparency about AI use, this technology could have a tremendous negative impact. Van Dis et al. recommend that “author-contribution statements and acknowledgments in research papers should state clearly and specifically whether and to what extent authors used AI technologies such as ChatGPT in the preparation of their manuscript and analysis” (Van Dis et al., 2023, p. 225).

Infodemic

I worked on Google's LaMDA earlier this year as an AI optimization specialist, reviewing prompts given to the system and comparing the two responses it returned for each. It was disconcerting that some of the content it generated sounded accurate but was not. This leads me to my final ethical concern: the infodemic. In his article “OpenAI’s new chatbot can explain code and write sitcom scripts but is still easily tricked” for The Verge, James Vincent also expresses concern about how easily chatbots can be tricked, explaining that “the bot often confidently [presents] false or invented information as fact…this is because such chatbots are essentially “stochastic parrots” — that is, their knowledge is derived only from statistical regularities in their training data, rather than any human-like understanding of the world as a complex and abstract system” (Vincent, 2022).

In their editorial, Van Dis et al. note that AI has the potential to “degrade the quality and transparency of research and fundamentally alter our autonomy as human researchers. ChatGPT and other LLMs produce text that is convincing, but often wrong, so their use can distort scientific facts and spread misinformation” (Van Dis et al., 2023). The internet is already besieged by false information, which spreads ever faster because of the viral nature of online platforms and emotionally charged events such as the COVID-19 pandemic, the 2020 U.S. election, and Russia's war in Ukraine.

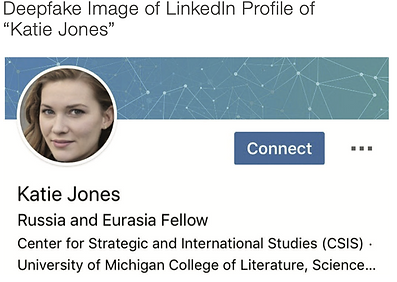

In his report “Artificial Intelligence, Deepfakes, and Disinformation: A Primer” for the RAND Corporation, Senior Behavioral Scientist Todd C. Helmus also expresses concern that AI could add to the already chaotic battlefield of internet misinformation. Helmus discusses “deepfake photos,” images rendered by generative AI that can be used to create fake social media accounts. One disturbing case he discusses, shown in Figure 2, was part of an espionage operation. Helmus states that the profile “was connected to a small but influential network of accounts, which included an official in the Trump administration who was in office at the time of the incident” (Helmus, 2022, p. 4). According to Helmus, deepfakes give nearly anyone the power to manipulate elections, exacerbate social divides, lower trust in authorities and institutions, and undermine journalism and trustworthy sources, as well as cause “an overall declining trust in media” (Helmus, 2022, pp. 6–7). Unfortunately, this issue has no clear solution beyond educating users to be cautious about sources and about accepting connections on social media from accounts that may have nefarious purposes.

Figure 2 Credit: Todd C. Helmus

Conclusion

John Culkin, Director of the Center for Communications at Fordham University, once claimed that “We shape our tools and therefore our tools shape us” (Culkin, 1967, p. 70). This is certainly true of these evolving AI technologies, and they must be shaped intentionally, with an eye toward progress rather than profit. Ultimately, the best way forward is to fortify the already flagging education system, introduce information literacy early, and adjust copyright law as this innovative technology emerges.

In the future, more legislation is needed to address the ethical and economic concerns presented by AI. In the meantime, a well-educated public that can use the technology as a tool and is transparent about its use is paramount. Requiring labels on materials produced by AI is another key step. Labeling gives users who are hesitant to engage with AI-generated work the ability to make an informed choice rather than feel as though they are being tricked. It would also provide clearer parameters for future datasets, so that as AI advances, changes in socialization, literacy, and other unforeseen areas can be more easily identified. Marshall McLuhan warned that “The hidden aspects of the media are things that should be taught because they have an irresistible force when invisible. When these factors remain ignored and invisible, they have an absolute power over the user” (NotPercy203, 2016, 20:10). We should take care, as consumers and producers, to understand the implications and architectures of these modern technologies.

References

Ahmad, S. F., Han, H., Alam, M. M., et al. (2023). Impact of artificial intelligence on human loss in decision making, laziness and safety in education. Humanities and Social Sciences Communications, 10, Article 311. https://doi.org/10.1057/s41599-023-01787-8

Andersen v. Stability AI. (2023, October 30). Order on motion to dismiss. https://copyrightlately.com/pdfviewer/andersen-v-stability-ai-order-on-motion-to-dismiss/?auto_viewer=true#page=&zoom=auto&pagemode=none

Anyoha, R. (2020, April 23). The history of artificial intelligence. Science in the News. https://sitn.hms.harvard.edu/flash/2017/history-artificial-intelligence/

Brabazon, T. (2015). Turnitin? Turnitoff: The deskilling of information literacy. Turkish Online Journal of Distance Education, 16(3). https://doi.org/10.17718/tojde.55005

Brittain, B. (2023, January 17). Lawsuits accuse AI content creators of misusing copyrighted work. Reuters. https://www.reuters.com/legal/transactional/lawsuits-accuse-ai-content-creators-misusing-copyrighted-work-2023-01-17/

Carr, P. (2023, June 22). U.S. reading and math scores drop to their lowest levels in decades. NPR. https://www.npr.org/2023/06/22/1183653578/u-s-reading-and-math-scores-drop-to-their-lowest-levels-in-decades

Culkin, J.M. (1967, March 18). A schoolman’s guide to Marshall McLuhan. Saturday Review, pp. 51-53, 71-72. https://webspace.royalroads.ca/llefevre/wp-content/uploads/sites/258/2017/08/A-Schoolmans-Guide-to-Marshall-McLuhan-1.pdf

De Angelis, L., Baglivo, F., Arzilli, G., Privitera, G. P., Ferragina, P., Tozzi, A. E., & Rizzo, C. (2023). ChatGPT and the rise of large language models: The new AI-driven infodemic threat in Public Health. Frontiers in Public Health, 11. https://doi.org/10.3389/fpubh.2023.1166120

Getty Images. (2023, January 17). Getty Images statement. Newsroom. https://newsroom.gettyimages.com/en/getty-images/getty-images-statement

Gill, S. S., Xu, M., Patros, P., Wu, H., Kaur, R., Kaur, K., Fuller, S., Singh, M., Arora, P., Parlikad, A. K., Stankovski, V., Abraham, A., Ghosh, S. K., Lutfiyya, H., Kanhere, S. S., Bahsoon, R., Rana, O., Dustdar, S., Sakellariou, R., Uhlig, S., & Buyya, R. (2023). Transformative effects of ChatGPT on modern education: Emerging era of AI chatbots. Internet of Things and Cyber-Physical Systems, 4. https://doi.org/10.1016/j.iotcps.2023.06.002

Heller, N. (2023, February 27). The end of the English major. The New Yorker. https://www.newyorker.com/magazine/2023/03/06/the-end-of-the-english-major

Helmus, T. C. (2022). Artificial intelligence, deepfakes, and disinformation: A primer. RAND Corporation. https://www.rand.org/pubs/perspectives/PEA1043-1.html

McLuhan, M. (2015). Understanding media: The extensions of man (W. T. Gordon, Ed.; Critical ed.). Gingko Press.

Microsoft News Center & IDC. (2018, May 2). Brave new world – How AI will impact work and jobs. Microsoft. Retrieved October 7, 2023, from https://news.microsoft.com/en-hk/2018/05/02/brave-new-world-how-ai-will-impact-work-and-jobs/

Morriss‐Kay, G. M. (2010). The evolution of human artistic creativity. Journal of Anatomy, 216(2), 158–176. https://doi.org/10.1111/j.1469-7580.2009.01160.x

NotPercy203. (2016, January 2). Marshall McLuhan - The medium is the message [1977] (Media savant) [Video]. YouTube. Retrieved October 10, 2023, from https://www.youtube.com/watch?v=UoCrx0scCkM

U.S. Copyright Office. (2021). Compendium of U.S. Copyright Office practices (3rd ed.).

U.S. Copyright Office [USCO]. (2023, March 16). Copyright registration guidance: Works containing material generated by artificial intelligence. Federal Register, 88(51), 16190. https://www.copyright.gov/ai/ai_policy_guidance.pdf

Van Dis, E. A. M., Bollen, J., Zuidema, W., van Rooij, R., & Bockting, C. L. (2023, February 3). ChatGPT: Five priorities for research. Nature, 614, 224–226. https://www.nature.com/articles/d41586-023-00288-7

Vincent, J. (2022, December 1). OpenAI’s new chatbot can explain code and write sitcom scripts but is still easily tricked. The Verge. https://www.theverge.com/23488017/openai-chatbot-chatgpt-ai-examples-web-demo

WGA. (n.d.). Summary of the 2023 WGA MBA. https://www.wga.org/contracts/contracts/mba/summary-of-the-2023-wga-mba